At Microsoft Build ’23, Satya Nadella introduced Fabric as Microsoft’s largest data product after SQL Server. Since then, it has become a hot topic in the world of data analytics. To further pique our interest, we offered free trial access to Microsoft’s Fabric service to try out the SaaS product. Learning and understanding the capabilities of Fabric has been an exciting experience for our team. Our experience resonated with what was shared in the keynote during the build. In fact, Fabric is a unified experience for every data professional.

Microsoft Fabric is a comprehensive cloud solution that combines data movement, engineering, integration, science, real-time analytics, and PowerBI reporting in a user-friendly, all-in-one SaaS package. Built on an integrated analytics solution that provides organizations with robust data security, governance, and compliance.

In the future, organizations will no longer need to manually combine data engineering services, but instead will need to use a single platform to connect, extract, transform, and load. This integrated platform prepares data for data science and business intelligence reporting by unifying all disparate analytics services under one umbrella.

Microsoft Fabric Overview

The Fabric image below summarizes the capabilities that SaaS can offer.

OneLake is an open storage layer. Data is stored in Delta Parquet format, which is open source and used by several data products such as Databricks. Next is serverless with Fabric, a compute layer that is completely separate from storage. These compute tiers ensure efficient use and maximum cost savings.

The 7 main components of Fabric:

1. Data Factory is a mature version of Azure Data Factory that is currently used by data engineers. This integrates with dataflow gen2. It comes with over 170 connectors and over 300 ready-to-use templates for conversion. One of the most common conversions performed using ADF today is ‘copy’. Fabric introduces ‘FastCopy’ to move data between data stores.

2. Synapse Data Engineering is the notebook component of Fabric. The notebooks are designed to run on the Spark framework, which is fully customizable with delta optimizations like V-order.

3. Synapse Data Science In addition to access to Data Wrangler’s low-code data preparation tools, it provides a rich set of built-in ML tools. SynapseML is a newly introduced simple distributed machine learning library for Spark.

4. Synapse Data Warehouse It is built to support open data formats without compromising governance and security.

5. Real-time analysis It integrates seamlessly with all other Fabric components, allowing users to gain insights from real-time data sets without much work.

6. Power BI It is a core component of Fabric. Alternatively, Fabric was introduced as a premium offering in Power BI. Visualization tools have been enhanced with new features like Copilot and Autocreate.

7. Data activation device Monitor data patterns and trigger alerts based on established criteria. All of this requires no code.

Implementing a data engineering focus in Fabric

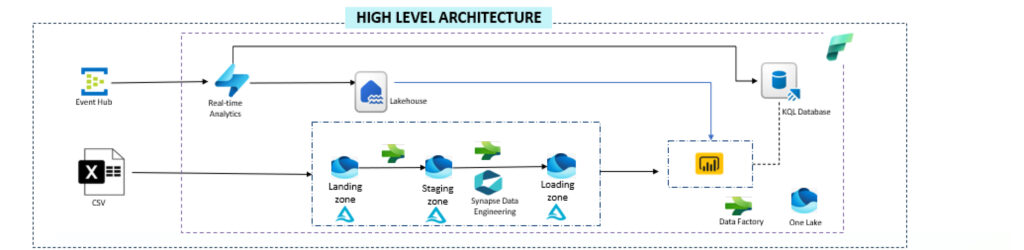

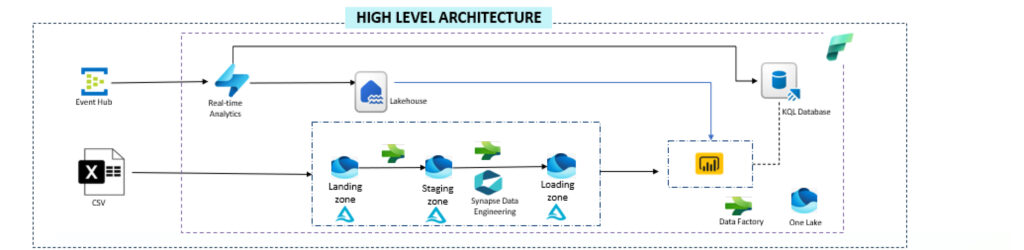

I had a telemetry use case that I wanted to implement in Fabric. Our goal was to collect both batch and real-time telemetry data from sources, process it through our lake house and KQL database, and then visualize it using Power BI.

We decided to collect data related to battery health and capacity, streaming some fields in real time and capturing others in batches. To implement this project, we assumed that some fields in the dataset would be streamed in real time and other fields would be captured in batches. We created a custom framework with configuration tables. This table contains the target table name to load and the load mode (incremental or truncated load). Based on the passed table name, the corresponding notebook was called for processing. The target table was always a lake house table, and a stored procedure was called to load that target table.

Created shortcuts to load CSV files as datasets in ADLS gen2 and source batch datasets in OneLake. For real-time data, we used Azure Event Hubs to generate the data and Event Stream to consume it. Real-time data was analyzed using the KQL database and Power BI, and data was loaded after conversion in Lake House. We used copy activity to move data from the lakehouse to the data warehouse for reporting purposes. We created a detailed report in PowerBI using battery health data to gain some insights.

Lessons learned from implementation

Fabric is an integrated platform for data analytics solutions. Microsoft developed this product to make the lives of data engineers easier.

I created a Databricks notebook to access and process OneLake datasets. Integration was possible and seemed easier than ADLS integration. This provides options for integrating Databricks with Fabric.

For example, a data factory does not require you to define additional linked services or sink objects. Easier to integrate with any source. You also don’t have to post every time there’s a change. It will be saved automatically. We now integrate directly with OneLake, eliminating the need to use Azure Key Vault. Task monitoring is covered in detail in Fabric, and now you can even monitor cross-workspaces.

Data engineering notebooks enable a high level of collaboration. Multiple team members can work on one laptop at the same time. The interface is simple to use. Although the underlying Spark framework provides powerful performance out of the box, it is highly customizable. There is a function to call a notebook within a notebook, which is very convenient for modularizing the code.

Real-time analytics is very mature in Fabric, allowing data coming in through a KQL database to be explored and accessed immediately. For this component, Spark Structured Streaming is not required. This is a big change in Azure service offerings. Native partitioning and indexing help ensure high performance of your data.

What makes fabric a crowd-puller?

Data Exploration

As cloud adoption continues to grow, organizations have transitioned from ETL to ELT. Nearly 90% of organizations have moved their data to the cloud for future analysis. However, they often struggle to understand what data lives where, resulting in what is now called a data swamp. OneLake can help these organizations by providing a better understanding of their data, giving business professionals more details to enhance data sets for faster insights. OneLake shortcuts allow you to access and analyze data from various repositories using Power BI Direct Lake mode.

data integration

With the right access set, data integration from multiple sources can be supported and easily merged with source analytics in a single UI. In many implementations, users lose trust in the data set because they cannot be sure that the values displayed are correct. This is mainly due to lack of traceability. Fabric can reveal data lineage that will alleviate that inhibition in the minds of business users.

The integration overhead of large-scale implementations makes life difficult for developers. Fabric provides seamless connectivity between various services and fully managed products, reducing developer effort by 20-30% depending on experience.

Cost and AI integration

Separating compute and storage cost calculations is a significant advantage. Because OneLake is open and accessible to everyone, it already has optimized storage. Compute pools are reused according to the execution timeline. It’s up to the team to plan job execution in a way that the batch job uses the same pool when used for PowerBI reports in the morning.

AI integration is being implemented at every layer of the Fabric. Co-pilots exist in each component of the fabric, and task processing is optimized based on previous execution. This is an important point of differentiation.

fabric adoption

Microsoft has made its plans clear for existing customers. They have always cherished them and have plans for the Fabric movement. ADF installation is a service provided to existing ADF users. The Synapse migration pipeline is available, but not yet defined. None of the existing components strengthened by Fabric will be retired anytime soon. Interestingly, many of the Amazon S3-related OneLake features have been introduced as part of storage access, meaning cross-platform access will soon skyrocket.

the way forward

Fabric is still in its infancy, but it has tremendous potential if used correctly. It can serve a variety of use cases across a variety of industries. OneLake also brings significant changes from a data governance and data cataloging perspective. Any underlying database or data warehouse in an open format encourages people from various cloud platforms to access and share data with Azure users. One thing that gets lost in the big changes is integration with MS Teams and Office apps. This will help non-technical teams who need insight. Several additional products planned as part of Fabric look promising. Semantic linking, data mirroring, dynamic lineage, Spark Autotune, Copilot integration of data factories and notebooks, Git integration, REST API for warehouses, workspace enhancements, etc. are a few things that pique our interest as data engineers.