Posted: December 18, 2023 7:01 AM Updated: December 18, 2023 7:01 AM

Correction and fact check date: December 18, 2023, 7:01 AM

In Brief

OpenAI has released a prompt engineering guide for GPT-4 that offers detailed advice on getting better results from large language models (LLMs).

OpenAI, the artificial intelligence research company, has released a prompt engineering guide for GPT-4. The guide offers detailed advice on getting better results from large language models (LLMs).

The guide describes six key strategies, each with tactics and example prompts, that can be combined to help users get the most out of their models.

Write clear instructions

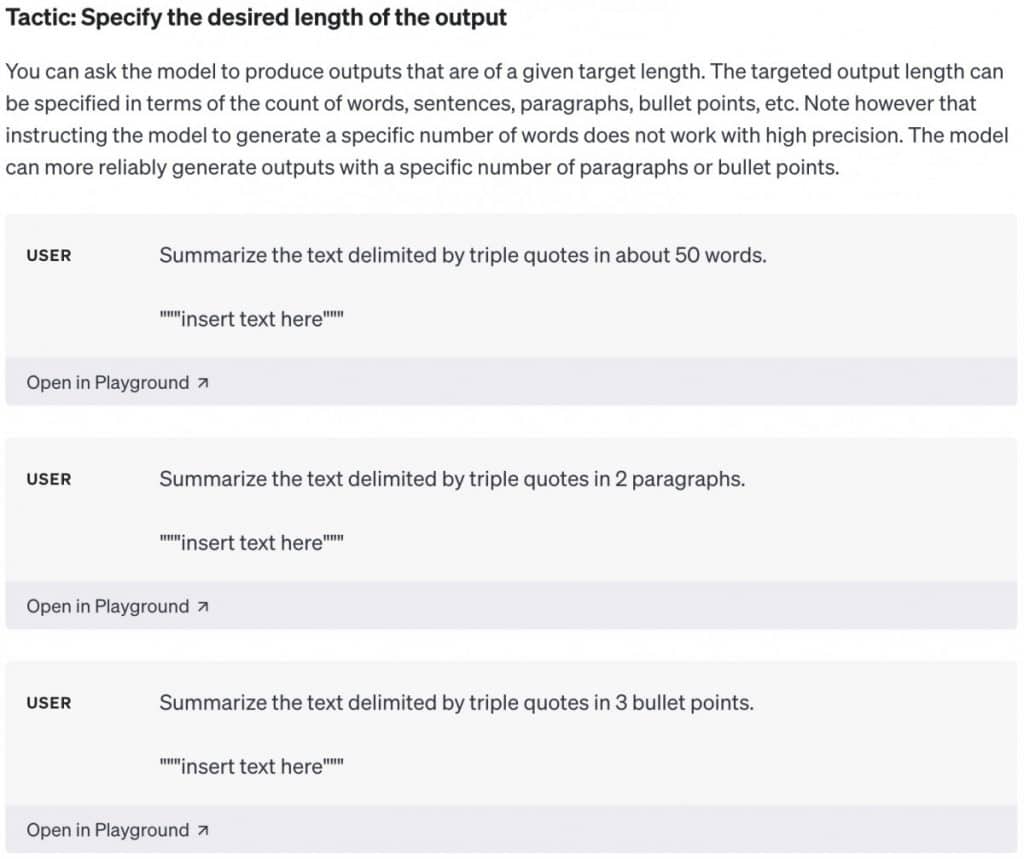

LLMs cannot guess what the user wants. If the output is too long, ask for a brief reply; if it is too simple, ask for an expert-level answer. The clearer the instructions, the more likely the model is to produce the desired result.

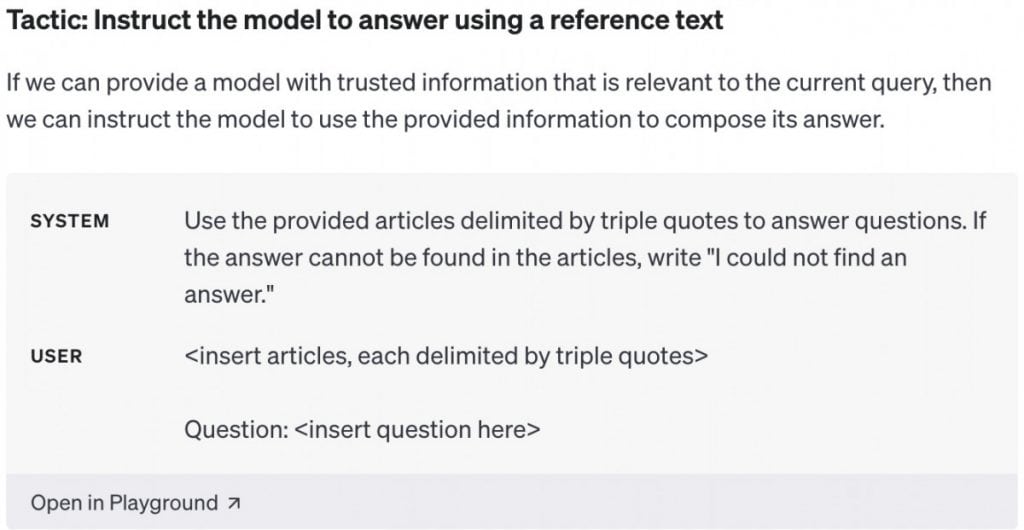
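As a rough illustration of stating length and level explicitly, the sketch below builds a chat-style message list. The `build_messages` helper and its parameters are hypothetical names chosen for this example, and the role/content layout is assumed to match the common chat-message convention rather than taken from the guide.

```python
def build_messages(question, audience="expert", max_sentences=3):
    """Build a chat-style message list that states level and length explicitly."""
    system = (
        f"Answer for a reader at the {audience} level. "
        f"Use at most {max_sentences} sentences."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


# Hypothetical usage: ask for a short, expert-level reply.
messages = build_messages("How does HTTPS protect data in transit?", max_sentences=2)
```

The resulting list would then be passed to whichever chat model the user works with.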

Provide reference text

Language models can produce inaccurate responses, especially on obscure topics or when asked for citations and URLs. Just as notes help a student on a test, providing reference text can improve the accuracy of the model's answers. Users can instruct the model to answer using a reference text, or to answer with citations from it.

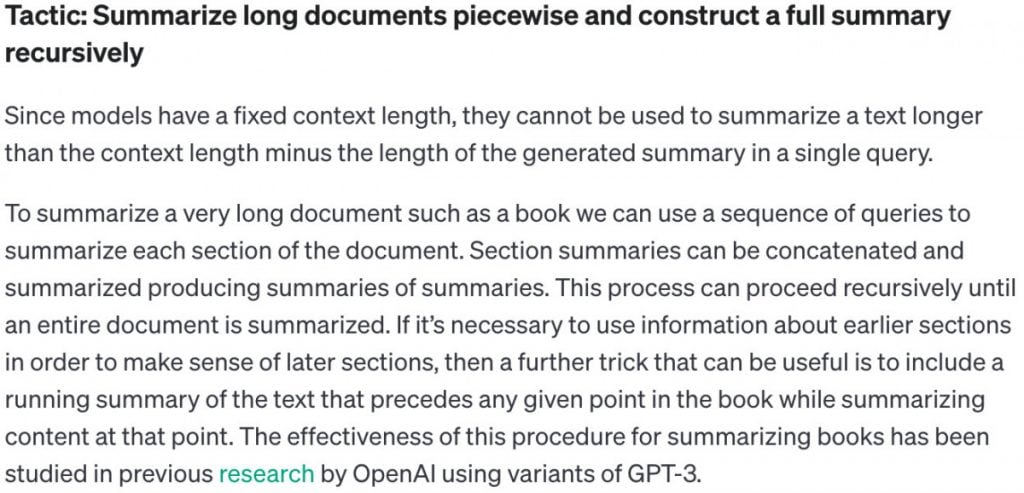
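One way to apply this is to wrap the reference in delimiters and tell the model to stay within it. The following is a minimal sketch; the `build_grounded_prompt` helper, the triple-quote delimiter, and the fallback sentence are assumptions made for illustration.

```python
def build_grounded_prompt(reference_text, question):
    """Wrap the reference in triple quotes and restrict the answer to it."""
    return (
        "Use only the article delimited by triple quotes to answer the question. "
        'If the answer is not in the article, reply "I could not find an answer."\n\n'
        f'"""{reference_text}"""\n\n'
        f"Question: {question}"
    )


# Hypothetical usage with a one-sentence reference text.
prompt = build_grounded_prompt("The sky is blue.", "What color is the sky?")
```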

Split complex tasks into simpler subtasks

Just as software engineers decompose a complex system into modular components, users should break down the tasks they give to a model. Complex tasks tend to have higher error rates than simple ones, and they can often be redefined as a workflow of simpler tasks in which the output of each step becomes the input of the next.

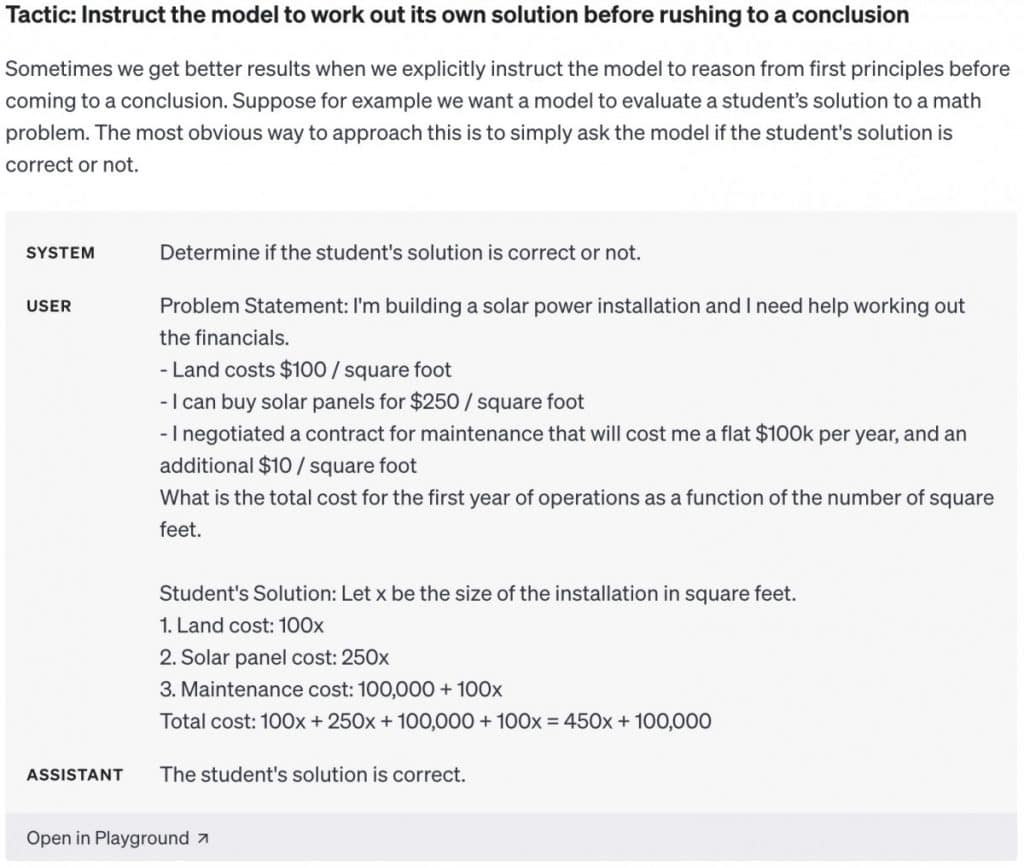

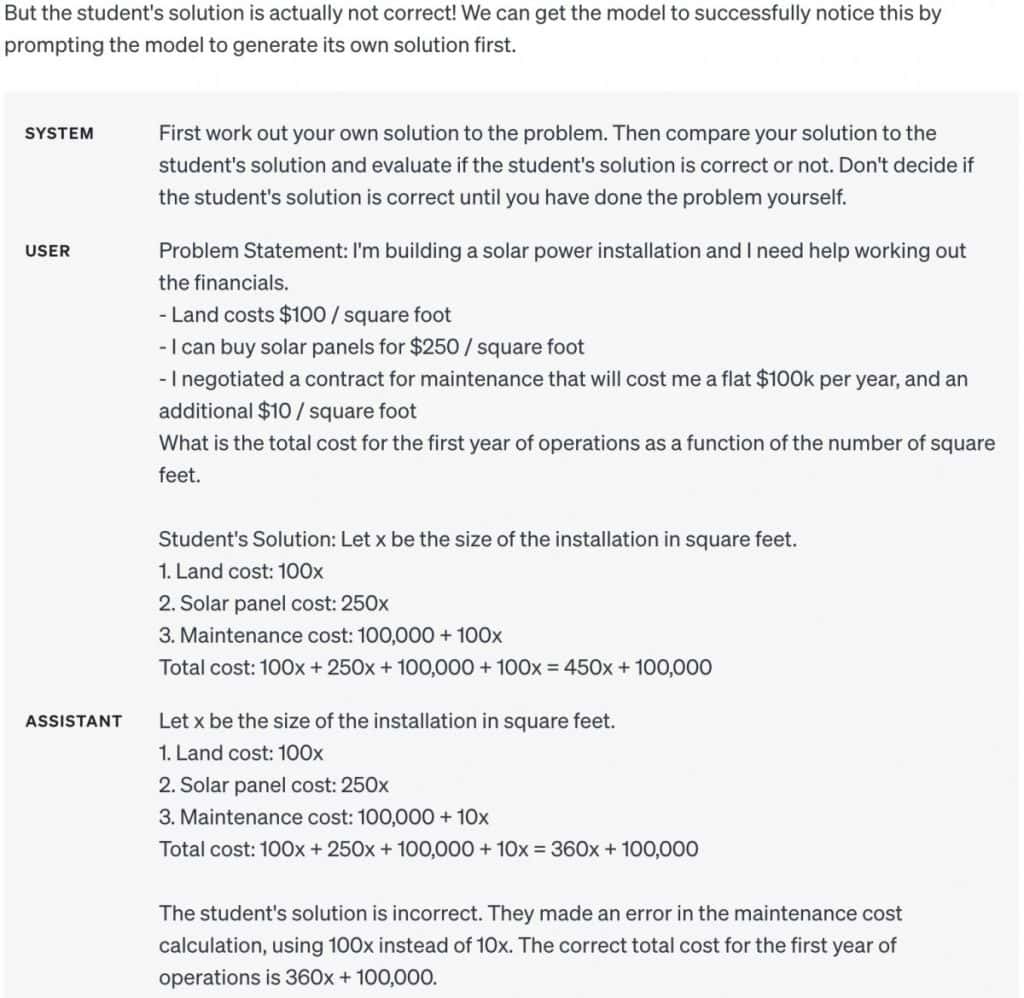
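The workflow idea, where each step consumes the previous step's output, could be sketched as follows. Both `run_pipeline` and `fake_model` are hypothetical; `fake_model` is a stand-in that only echoes its input so the example runs without a real model.

```python
from typing import Callable, List


def run_pipeline(model: Callable[[str], str], steps: List[str], text: str) -> str:
    """Run each instruction in order; each step's output is the next step's input."""
    current = text
    for instruction in steps:
        current = model(f"{instruction}\n\n{current}")
    return current


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call: it tags the body with the
    # instruction it received instead of actually performing the task.
    instruction, _, body = prompt.partition("\n\n")
    return f"[{instruction}] {body}"


result = run_pipeline(fake_model, ["Summarize", "Translate to French"], "long report")
```

With a real model in place of `fake_model`, each instruction stays small and can be checked on its own.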

Give the model time to think

LLMs make more reasoning errors when they answer immediately. Asking for a "chain of thought" before the final answer can help the model reason its way to a more reliable and accurate response.
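A simple way to request reasoning before the answer is to append an instruction to the question and then read the final answer from a marked line. This is only a sketch: the wording of `COT_SUFFIX` and the `Answer:` marker are assumptions, not text from the guide.

```python
COT_SUFFIX = (
    "Work through the problem step by step first. "
    "Then give the result on a final line starting with 'Answer:'."
)


def with_reasoning(question: str) -> str:
    """Append the step-by-step instruction to the question."""
    return f"{question}\n\n{COT_SUFFIX}"


def extract_answer(reply: str):
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(reply.splitlines()):
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            return stripped[len("Answer:"):].strip()
    return None
```

Separating the reasoning from the marked answer line lets an application show only the final result while the model still works through the steps.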

Use external tools

Compensate for the model's weaknesses by feeding it the output of other tools. A code execution engine, such as OpenAI's Code Interpreter, can handle mathematical calculations and run code. If a tool can perform a task more reliably or efficiently than the model, consider offloading the task to it for better results.

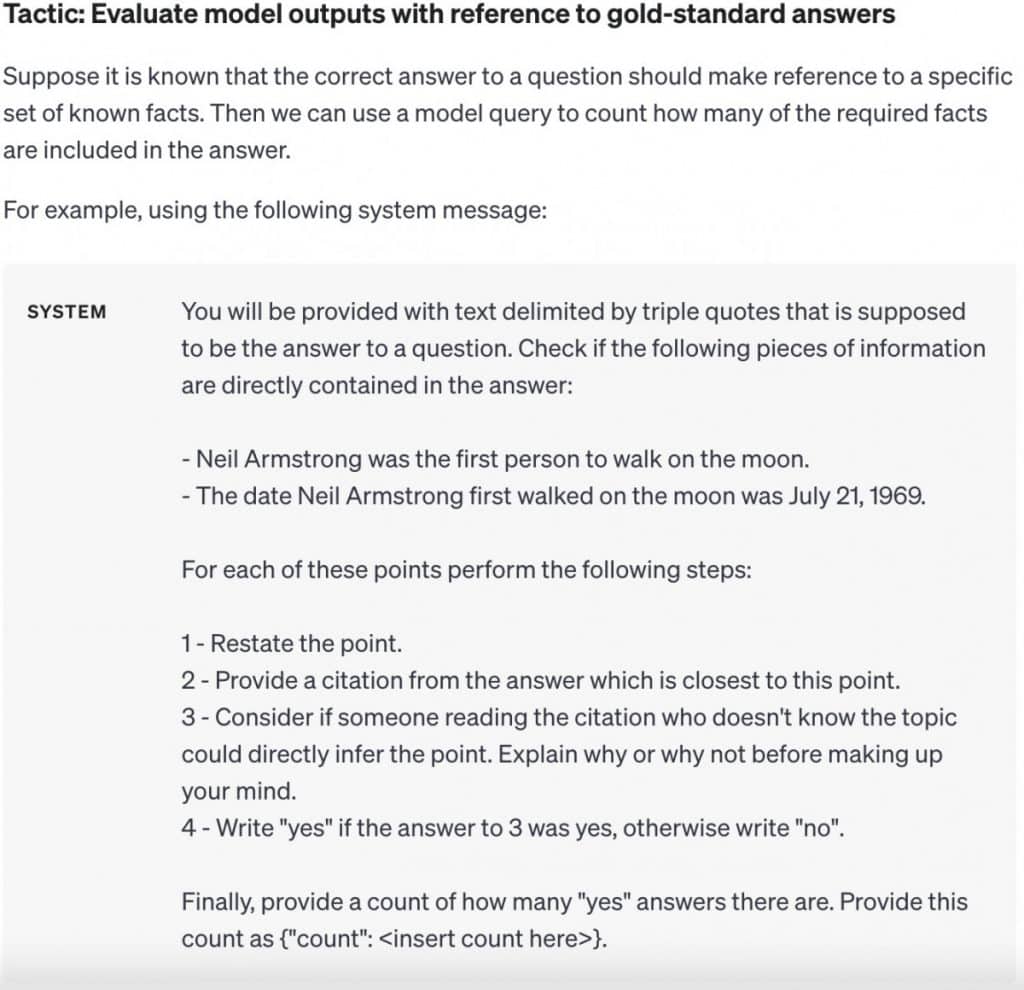

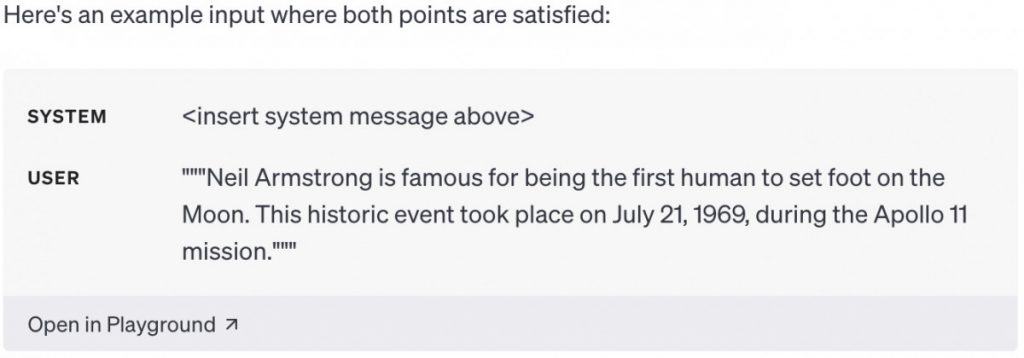
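To illustrate offloading, the sketch below computes arithmetic in ordinary Python and hands the result back as text that could be included in a prompt. It is not how Code Interpreter works internally; `calculate` and `tool_message` are hypothetical helpers, and only basic arithmetic is supported.

```python
import ast
import operator

# Arithmetic operators the calculator accepts.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}


def calculate(expression: str):
    """Evaluate a plain arithmetic expression without using eval()."""
    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


def tool_message(expression: str) -> str:
    """Format the computed result as text to include in the next prompt."""
    return f"Calculator result for {expression}: {calculate(expression)}"
```

Anything other than numbers and the listed operators raises `ValueError`, so the helper cannot be used to run arbitrary code.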

Systematically test changes

Performance is easier to improve when it can be measured. A change to a prompt may improve results on a few isolated examples yet lower overall performance on a broader set of inputs. Building a comprehensive test suite helps confirm that a change is a net improvement.

OpenAI's prompt engineering guide for GPT-4 gives users explicit strategies and tactics for getting more reliable results from LLMs across a variety of scenarios.
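A small test suite can be as simple as a list of questions with expected answers and a score per prompt template. The sketch below assumes a substring match is an adequate check; `accuracy`, `fake_model`, and `CASES` are hypothetical, and `fake_model` is a lookup table standing in for a real model.

```python
from typing import Callable, List, Tuple


def accuracy(model: Callable[[str], str], template: str,
             cases: List[Tuple[str, str]]) -> float:
    """Fraction of cases where the expected string appears in the model reply."""
    hits = sum(
        expected.lower() in model(template.format(question=q)).lower()
        for q, expected in cases
    )
    return hits / len(cases)


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model: a tiny lookup table.
    answers = {"France": "Paris", "Japan": "Tokyo"}
    for country, capital in answers.items():
        if country in prompt:
            return capital
    return "unknown"


CASES = [
    ("Capital of France?", "Paris"),
    ("Capital of Japan?", "Tokyo"),
    ("Capital of Peru?", "Lima"),
]
score = accuracy(fake_model, "Answer briefly: {question}", CASES)
```

Running the same cases against each candidate template before and after a change shows whether the change helps across the whole set rather than on a single example.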


About the author

Alisa Davidson is a reporter for Metaverse Post. She focuses on everything related to investing, AI, metaverse, and Web3. Alisa holds a degree in Art Business and her expertise lies in the fields of art and technology. She developed a passion for journalism through writing about VCs, notable cryptocurrency projects, and participating in science writing.